Ewen Bell 📸

ewen@social.ewenbell.com – People who understand a field of expertise deeply are unimpressed by LLM output.

– Some people love convenience so much they really want to believe LLM will solve the problems they don't want to address with their own effort.

– Tech Bros love the AI fantasy precisely because they don't respect the expertise of others, only themselves, and precisely because they love the allure of shortcuts to problems instead of doing the work to find real solutions.

... For some people, any "answer" is a good answer. For others, the right answer really matters. I wonder what happens to an organisation when you remove all the humans who care about the right answer, and replace them with a machine that doesn't?

Lightfighter

Lightfighter@infosec.exchange @ewen crypto, blockchain, NFTs, AI, and now "super-intelligence." Just another grift built upon a potentially somewhat useful technology.

Albert Cardona

albertcardona@mathstodon.xyz The screenshot is superb ... what an (unwitting?) self-dunk. Plus, who in their right mind would call an LLM "smart"? Comprehensive, perhaps, but smart? And Altman can't claim he doesn't understand what he's dealing with, hence he is speaking to mislead on purpose.

Ewen Bell 📸

ewen@social.ewenbell.com I listened to a very thoughtful explanation recently about how wealthy folks talk. How they choose their words.

Regular people feel that if they share something in conversation then it's important they be truthful. That we say what we really feel, and don't misrepresent a situation. Talking is about being helpful as much as expressive.

But the wealthy don't really think like that. Any conversation is rooted in the task of convincing someone to do something for you. The goal is to get what YOU want, nothing else. The very idea of truthfulness or honesty just isn't relevant. You say whatever you need to say to convince your audience. What you say today might totally contradict what you said yesterday, and it doesn't matter.

They see conversation as just winning people over. There's no requirement for truth at any point.

Juggling With Eggs

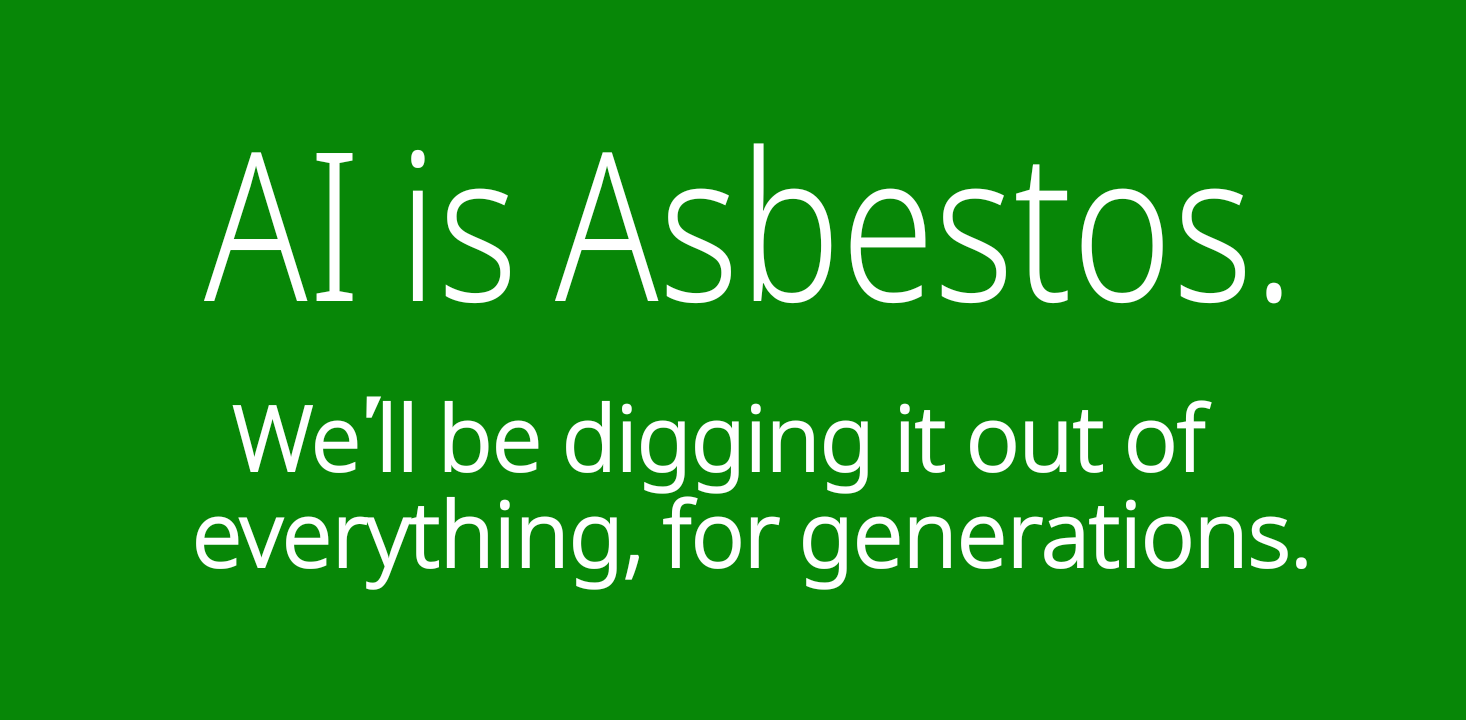

JugglingWithEggs@mstdn.social I believe this is a quote from Cory Doctorow… I read it this morning and choked on my shreddies. I spend my days making asbestos removal requests based on receiving reports on sampling together with photographs… but yeah, one day soon someone will make an economic decision based on a cost/benefit analysis as to whether we need to have humans checking and authorising asbestos removals or if this could all be done by AI.

Steve of Mastodon

marsfield@mastodon.social @ewen @albertcardona Coming at this topic from another angle, the sense of, or lack of, social responsibility:

Morris, A. 2025. What You’ve Suspected Is True: Billionaires Are Not Like Us. 'Rolling Stone' (available on-line: https://www.rollingstone.com/culture/culture-commentary/billionaires-psychology-tech-politics-1235358129/, accessed 6 July 2025).

From studying car driver behaviour, the conclusion is "Wealth tends to make people act like assholes, and the more wealth they have, the more of a jerk they tend to be."

Hugs4friends ♾🇺🇦 🇵🇸😷

Tooden@aus.social @ewen IMV, AI - if programmed by an unrelated 5 year old - would always be 'smarter' than Sam.

Rich 🔶UK #RejoinEU

rpin42@mastodon.social @ewen, it’s not good having everyone agree with you on social media.

I’ve been learning to live with Microsoft365 CoPilot at work over the last 2 years. It saves me hours of time. It gave me new capabilities. At a strategic level, then, it’s useful. Maybe not for detailed decisions… but at gathering and summarising evidence it’s brilliant. The important skill is knowing when you can trust it. But that’s the same with humans…

Ewen Bell 📸

ewen@social.ewenbell.com Definitely seeing different schools of thought on the value of LLM for summaries. They are good at some things, I have no doubt, but not everything.

Rich 🔶UK #RejoinEU

rpin42@mastodon.social @ewen A particular risk seems to be around health and safety decisions. Also, customer services and cyber security.

Ewen Bell 📸

ewen@social.ewenbell.com I think my general observation is that the people who most want to insert LLM into our lives are not fussed where it ends up. They are the kinds of people who don't care so much about quality of outcomes.

LLM as a technology is not a big deal. It is what it is. The problem is how it gets applied to our society. Most people affected by it are not given a choice.

@ewen It's completely useless for science. If I were to need a paper by author X, it will absolutely give me a paper of X, existing or not.

An LLM is also bad at summarising nuanced results and contradicts itself in a summary.

Does it help with programming? Sure, but read the code. It's as if a foreign writer with basic understanding of English and a love for puzzles wrote a book. Utterly long and way too complicated!